The Cartoon GAN work stream laid the foundation for GAN integration at Instagram, as it was the first effect of its kind to use machine learning to create unique, custom-tailored visual augmentations of a user's face in real time. The effect uses GAN technology to transform the user's face into a stylized cartoon version of themselves.

Effects and experiences powered by Generative Adversarial Networks (GANs) provide a unique, custom-tailored experience for each user. Magical experiences that once would have required teams of experts, time, and resources can now be automated and run in real time.

Cartoon GAN Effect

The dataset collection problem

To provide a consistent experience across many different users, a viable art input dataset must have a clear and distinguishable uniformity in style or appearance. However, a high bar for experience quality and clear identity preservation for the user requires very large datasets with a broad range of variation. Given the cost, time, and resources needed to create these massive datasets, and because we had no clear definition of exactly what data, or how much of it, we needed, the task was daunting. So our approach was to start small, learn, and adapt as we went. With only a three-person internal team, we turned to our vendor (Buck LA) to create a highly curated character creator rig for us.

We had to define the scope of our asks without blowing our budget. We needed characters with enough variation in facial features, skin, hair, and eye pigments, and expressions to encompass the entirety of the human experience.

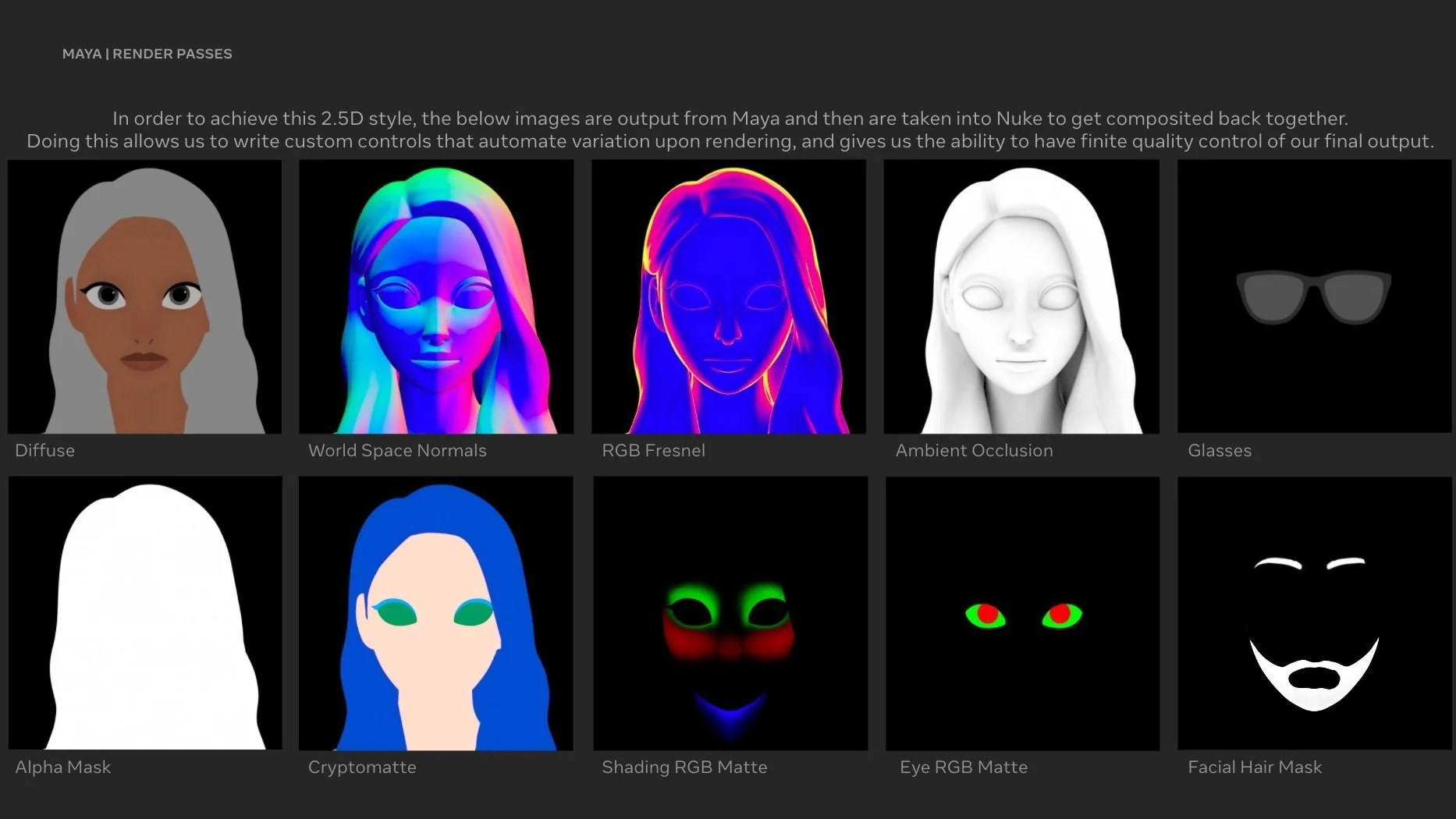

I was the 3D Lead as well as a Designer on this work stream, and it was my job to figure out how to optimize our 3D asks for the large amount of data we needed to deliver, while also building out an infrastructure flexible enough to absorb our learnings. All the while, the system had to stay efficient enough for our three-person team to ingest after the initial lift from our vendor.

Below I have laid out images from various stages of our process, and I would be happy to get into the nitty-gritty with you on a call.

First Round Rig

Final Rig

Corrective Dampen Rig controls allow one rig to fit multiple ‘characters.’ Made by Buck.

Animation Design

First Round Animation + Pigment Variants

Final Round Anim | 500 Image Dataset

Final Round Anim | 10,000 Image Dataset

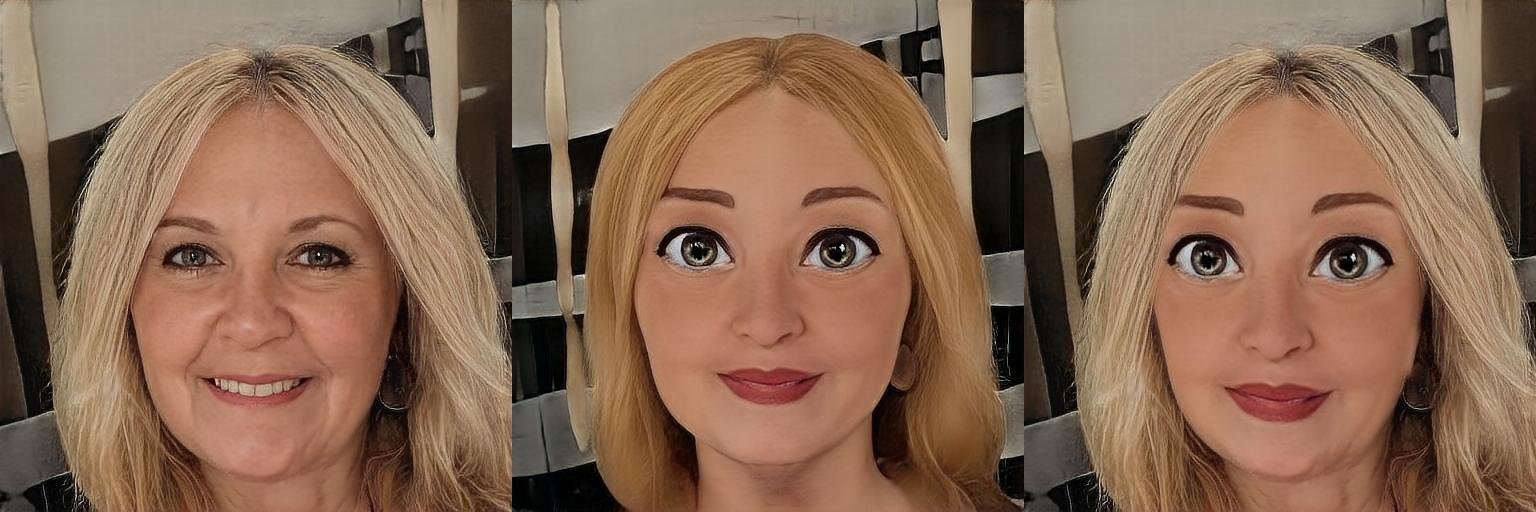

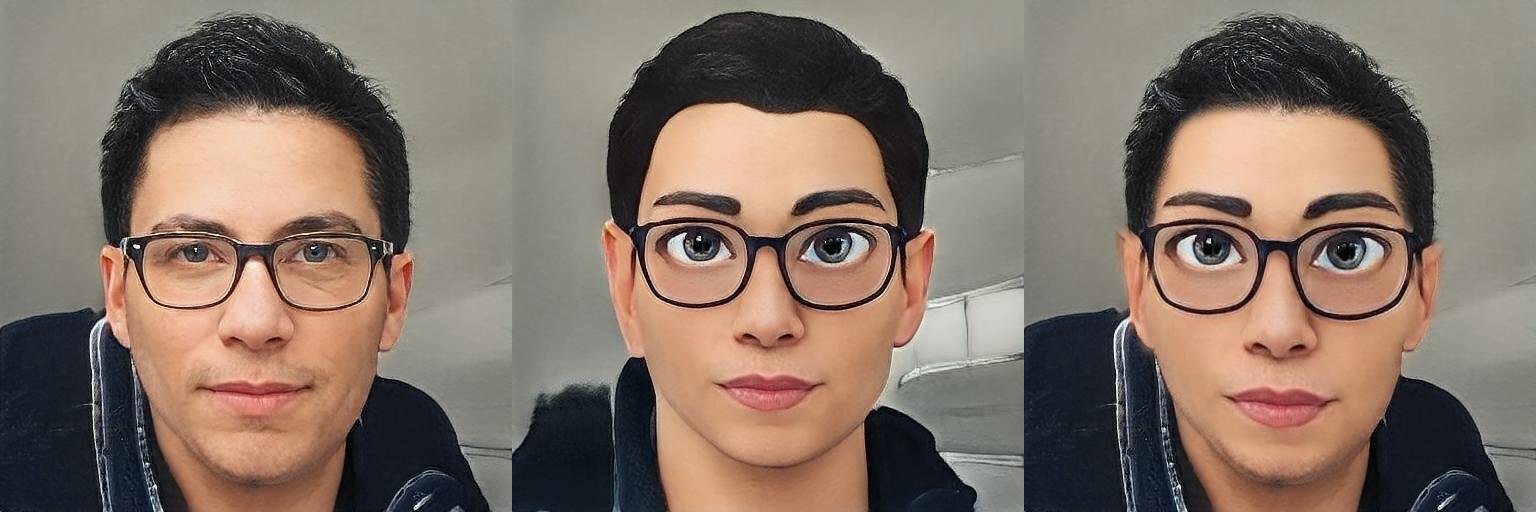

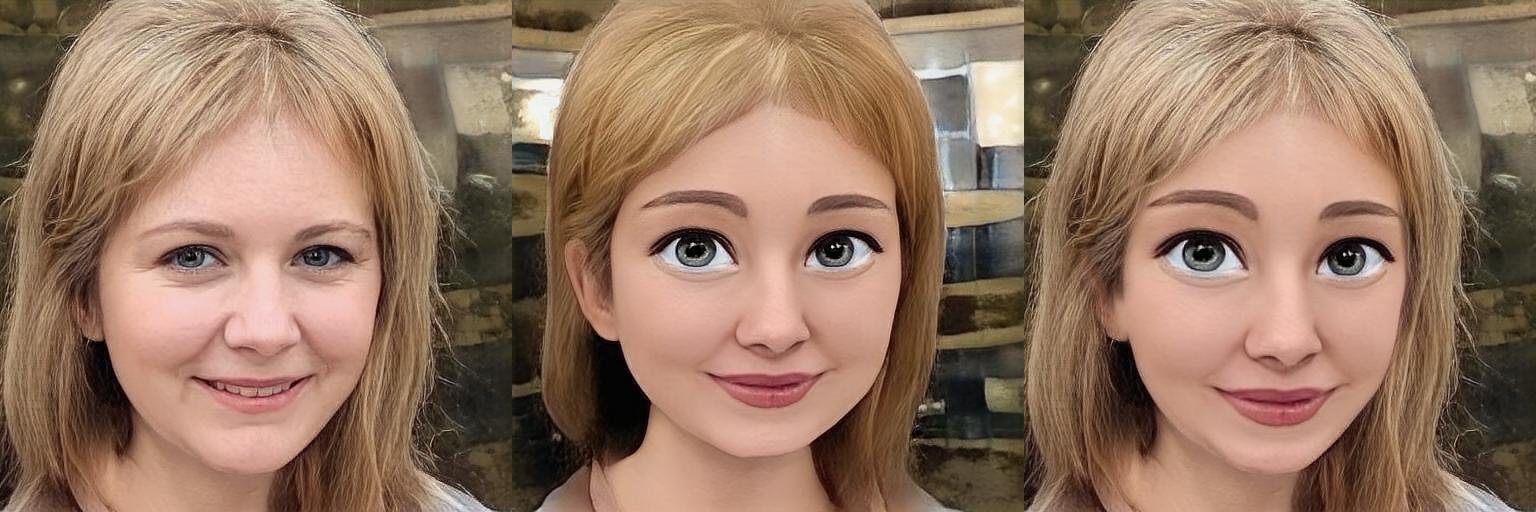

The first stage is blending the stylized, or ‘toon,’ dataset into the photoreal dataset.

The trained output is then applied to the user's face as a tracked 2D texture in real time.

Above is the last improvement to the model before resources were pulled from this space. Our partners at XR Tech continued to refine temporal consistency and identity preservation until the very end. The plan was to get these two highest-priority parameters to pass our quality evaluation, then re-implement accurate eye and mouth actions (i.e., blinking, speaking, and emoting). We faced many hurdles but learned so much over the course of this project.

In the end, this particular model wasn't passing our quality evaluation for all users' skin tones, and if it wasn't going to work for our entire user base, then it wasn't going to work for us.

We took our learnings and the model we developed and decided to ship a much lighter series of Emotive GAN effects, the first being ‘Smile.’

As of December 2022, Smile had an average conversion rate of 11.42%.

Smile had a total share DAP of 2,592,211, making it the 24th most popular 1P effect

(out of 156 1P effects with a share DAP above 5,000).

So, in the end, we called that a win. We weren't able to ship our initial, ambitious concept directly as our first machine-learning-powered effect, but all of that work was leveraged to create something that our users very much enjoyed.

I am not only excited about the future of GAN-powered effects but also thoroughly exhilarated by the intersection of technology and design in which we currently find ourselves. Moving forward, I endeavor to bring more projects like this one out into the world.